At ADAMAS, we’re passionate about helping the businesses we serve with computer systems compliance (CSC). But just what does that mean, and how has it changed over the years?

I had an interesting conversation recently with a QA colleague, reminiscing about the origins of CSC and the practices and principles contained in the so-called “Red Apple” guide1 (named, it seems, because the FDA-sponsored workshop that established many of the principles in the guide was held at the Red Apple Conference Center near Little Rock, Arkansas, USA, in 1987).2

Although mentioning Red Apple shows my considerable age, I think it’s fair to say that “back in the day” CSC simply meant “computer system validation” (CSV). If there was a formal systems development life cycle (SDLC) at all, most systems followed a classic Installation/Operational/Performance Qualification (IQ/OQ/PQ) model.

Documentation was often sparse, Agile had not yet come into widespread use, users had “requirements” or “specifications” rather than “stories”, and scrums were only ever encountered in a game of rugby,3,4 or maybe the New Year sales.

How times have changed. While “classic” CSV still forms the foundation of many organisations’ practices, CSC is now much broader in scope. SDLCs have developed and multiplied, and many different styles and iterations are now in use across our industry.

It would also be remiss of me not to recognise the influence of 21 CFR Part 11, and the fact that Good Automated Manufacturing Practice® (GAMP) is now a weighty tome, checking in at 352 pages. That’s not to mention cyber-threats such as denial-of-service (DoS) attacks,5 including the brilliantly named “R U Dead Yet?” (RUDY) attack,6 as well as malware, ransomware and other potential data-security breaches.

Of course, we still need to confirm that our organisations and vendors have a robust SDLC, and that systems and software perform as intended – usually by conducting a range of validation audits for systems impacting on data integrity and subject safety.

However, reflecting on the increasing sophistication (and prevalence) of networks and applications, it’s clear that there are now many more processes supporting computerised systems that merit attention. This is why regulators now commonly expect organisations to run a comprehensive programme of CSC process audits in addition to validation audits.

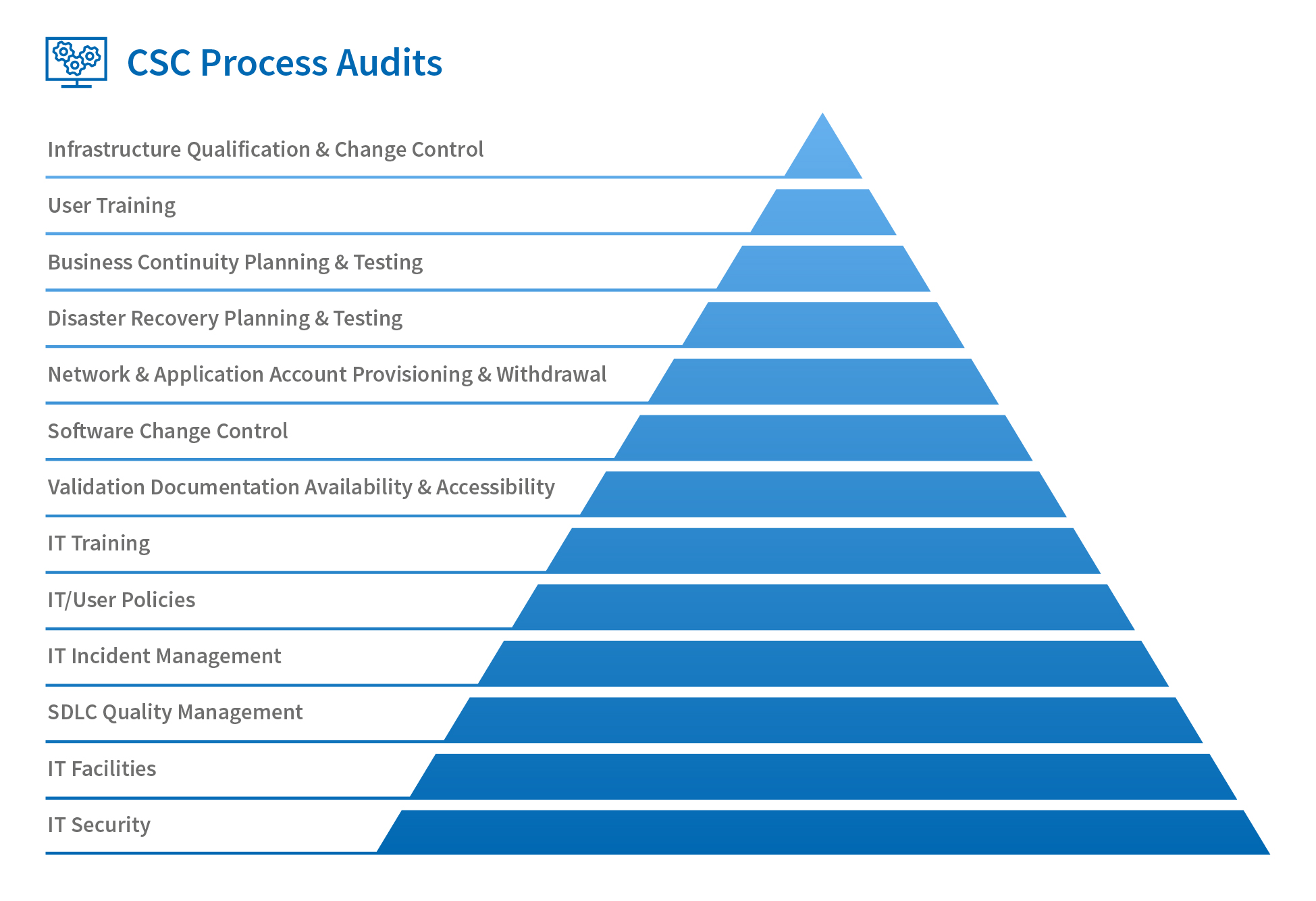

There are many areas that a robust internal process audit programme should encompass, as shown in Fig. 1. This is not an exhaustive list, so you should still evaluate the needs of your specific organisation, but one area deserves particular mention: IT security. This is a diverse topic that includes everything from hosting and account-provisioning arrangements to penetration testing and data encryption/transmission (I’ll cover this in more detail in another blog post).

Even the best CSV efforts can be undermined by an insecure network, application or data-exchange protocol. In my experience, this is a topic that is rarely subject to audit.

Only when we have all these aspects covered can we truly consider that we have an accurate picture of CSC in our own organisations and those of our suppliers.

Of course, the IT and CSC landscape will continue to develop. Today’s CSC activities focus mainly on systems whose outcomes are relatively predictable and that involve a significant “human” element – from programmers and developers to users and system/network administrators.

But what happens when computer systems are able to function independently? Some of the planet’s greatest thinkers have voiced concerns about what could happen as artificial intelligence (AI) becomes more prevalent.7

It’s easy to see the beginnings of AI in our own industry. For example, many Interactive Response Technology (IRT) systems have long been able to balance investigational product (IP) stock levels and re-route shipments without human guidance beyond their initial programming. Imaging technology already has automatic diagnostic and troubleshooting algorithms. Automated External Defibrillators (AEDs) diagnose and treat the life-threatening cardiac arrhythmias of ventricular fibrillation and pulseless ventricular tachycardia with minimal input from users who need not be medically trained.

As technology develops, how do we “validate” a system that can “think” and potentially make decisions relating to clinical trials with little or no human input?

For further advice on this or any other concerns relating to CSC, or for details of how ADAMAS’s CSC experts can help, please contact Matt Barthel at matt.barthel@adamasconsulting.com or on Tel: +44 (0)1344 751 210.

- 1 Computerised Data Systems for Non-Clinical Safety Assessments – Current Concepts and Quality Assurance, DIA, September 1988

- 2 Good Clinical, Laboratory and Manufacturing Practices: Techniques for the QA Professional, Philip Carson and Nigel Dent, Royal Society of Chemistry, 31 October 2007, §37.2.1 ‘Validation’ Becomes Essential

- 3 https://en.wikipedia.org/wiki/Scrum_(rugby)

- 4 https://en.wikipedia.org/wiki/Scrum_(software_development)

- 5 http://searchsecurity.techtarget.com/definition/denial-of-service

- 6 http://www.computerweekly.com/photostory/2240164369/Five-DDoS-attack-tools-that-you-should-know-about/3/RUDY-R-U-Dead-Yet

- 7 http://www.bbc.co.uk/news/technology-30290540

Matt Barthel

ADAMAS Consulting Ltd

Matt.Barthel@adamasconsulting.com